The Voice Season 21 Finale - Coldplay "My Universe" ft. BTS

When Coldplay and BTS came together for their 2021 smash single “My Universe”, their musical styles weren’t the only things blended together to achieve something spectacular.

The track’s music video used 3D volumetric capture to record each member from every angle, allowing the two supergroups to appear together despite performing half a world apart.

We came in to take this one step further: bridge the gap between worlds by bringing the video’s holograms into the real world through augmented reality (AR).

Why Real-Time:

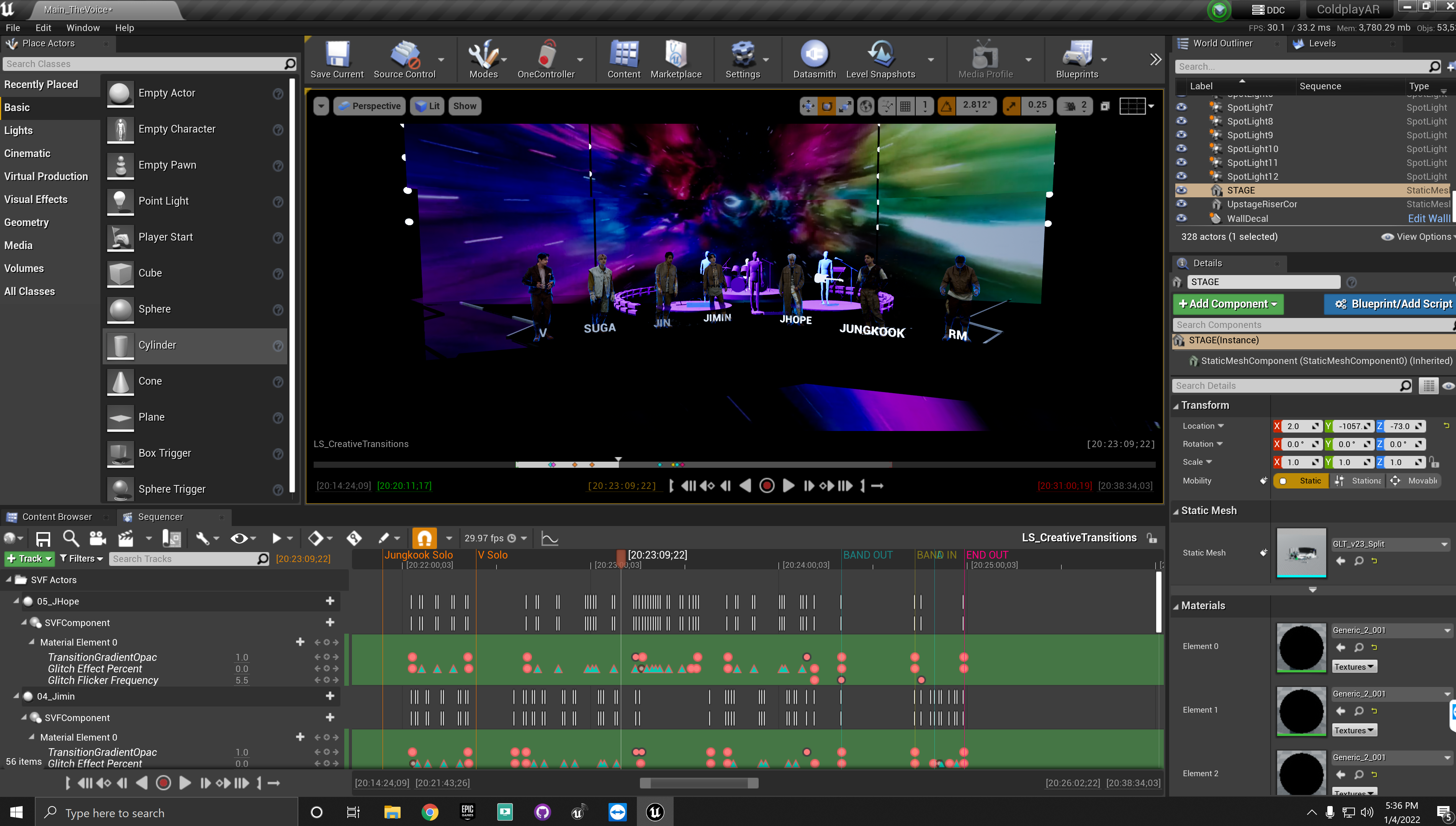

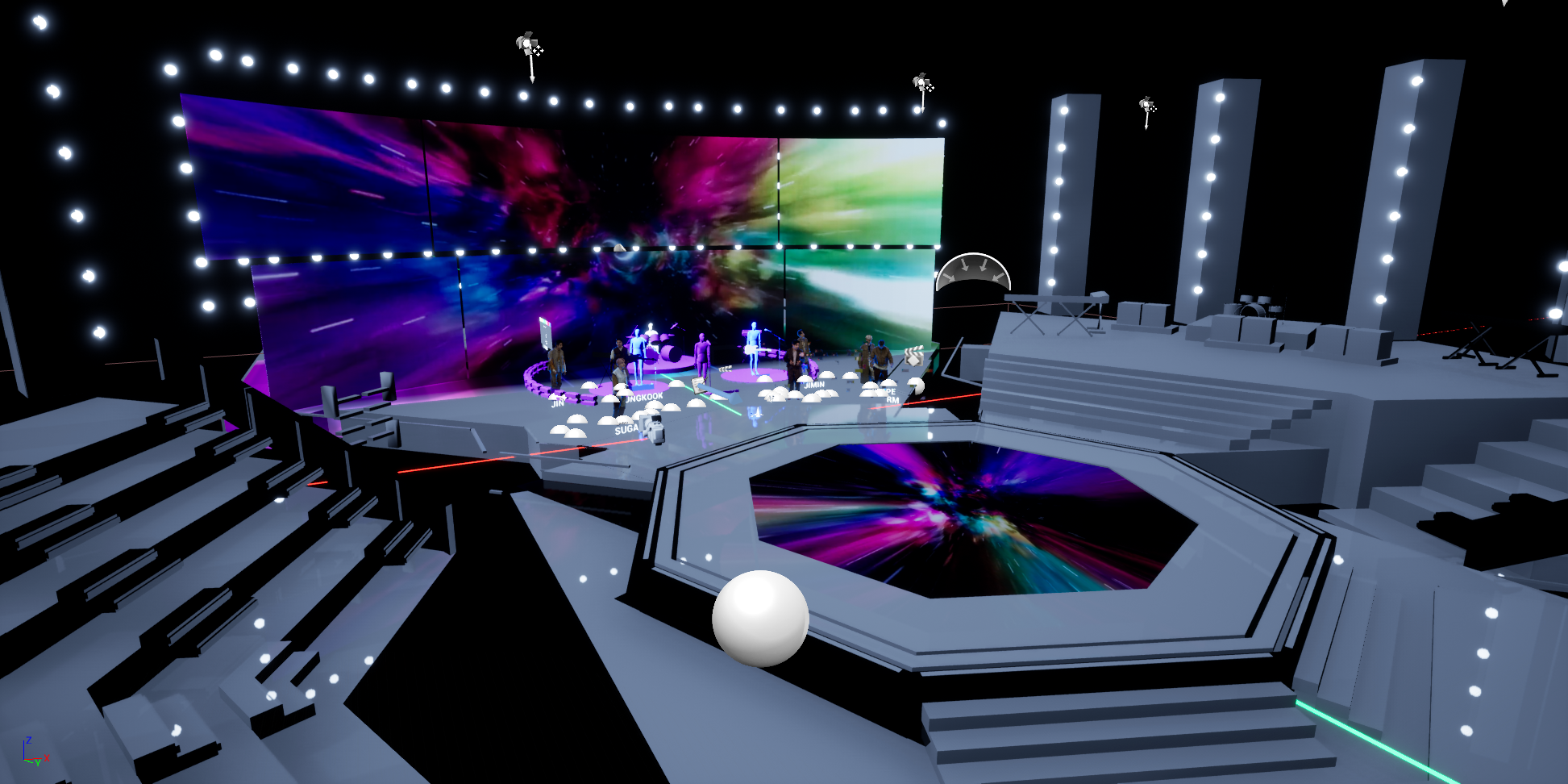

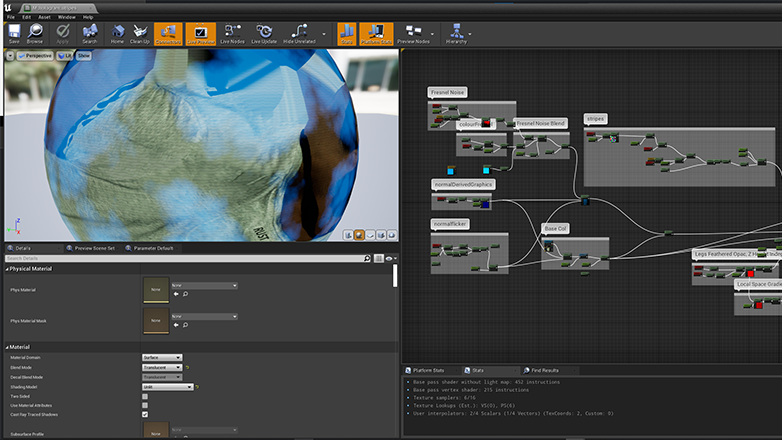

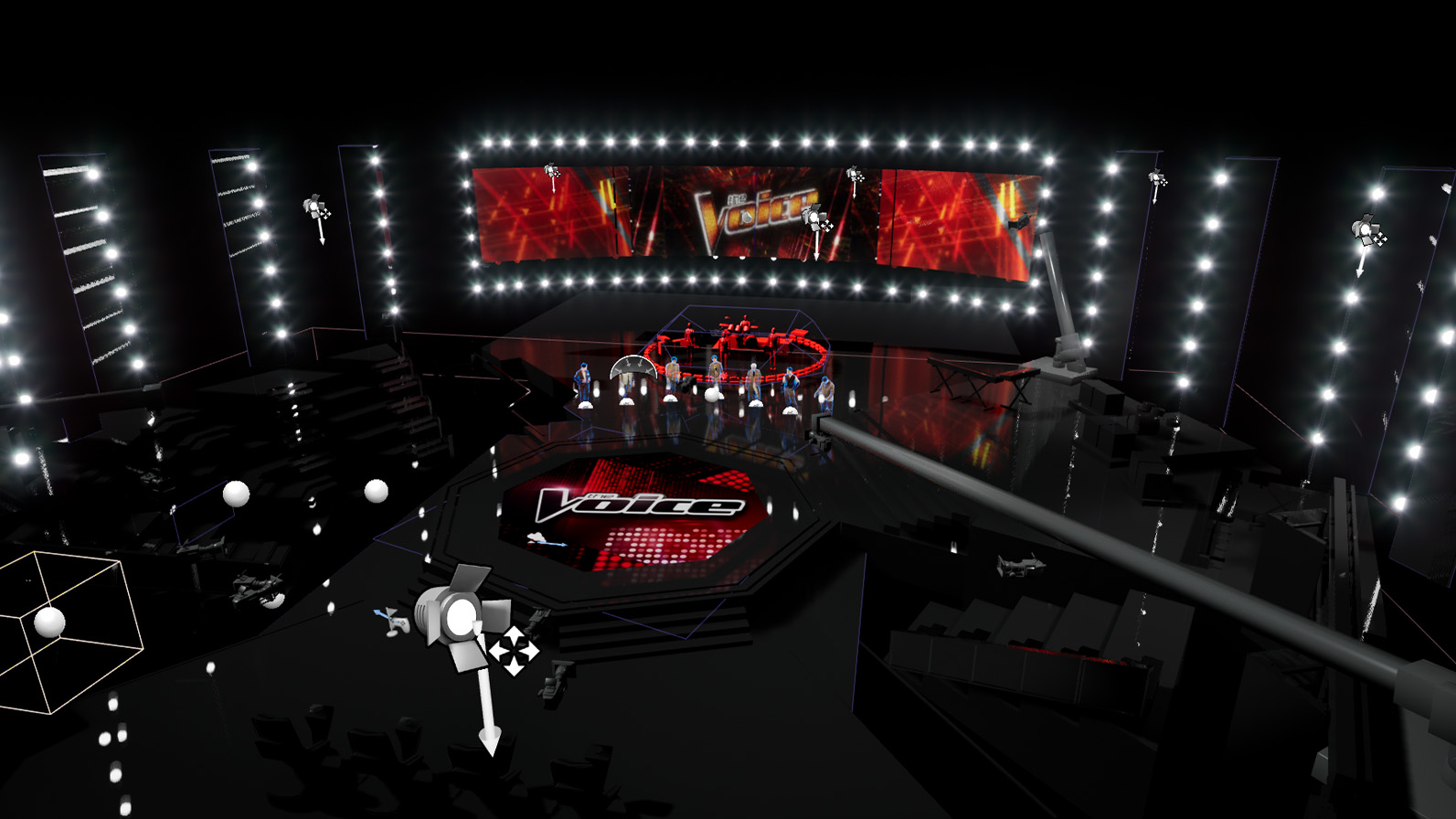

Rather than use a Pepper’s ghost effect, best known from 2Pac’s Coachella “appearance”, we combined Dimension Studios’ volumetric capture data with Unreal Engine. This enabled a real-time workflow that offered several advantages over a post-heavy pipeline while preserving the energy of a live performance.

Optimizing for real-time playback in Unreal Engine meant that a full previsualization process was possible without any performer, digital or otherwise, setting foot onstage. It also allowed near-instant adjustments to the AR performers’ position and timing, both on site and in post-production.

Volumetric video was similarly critical to our success, capturing every subtlety of the performers for playback without necessitating deep dives into animation pipelines. It also facilitated dynamic camera movements and streamlined post-production by allowing certain shots to be recomposited.

Challenges:

As with any disruptive workflow, persistent problems called for innovative solutions.

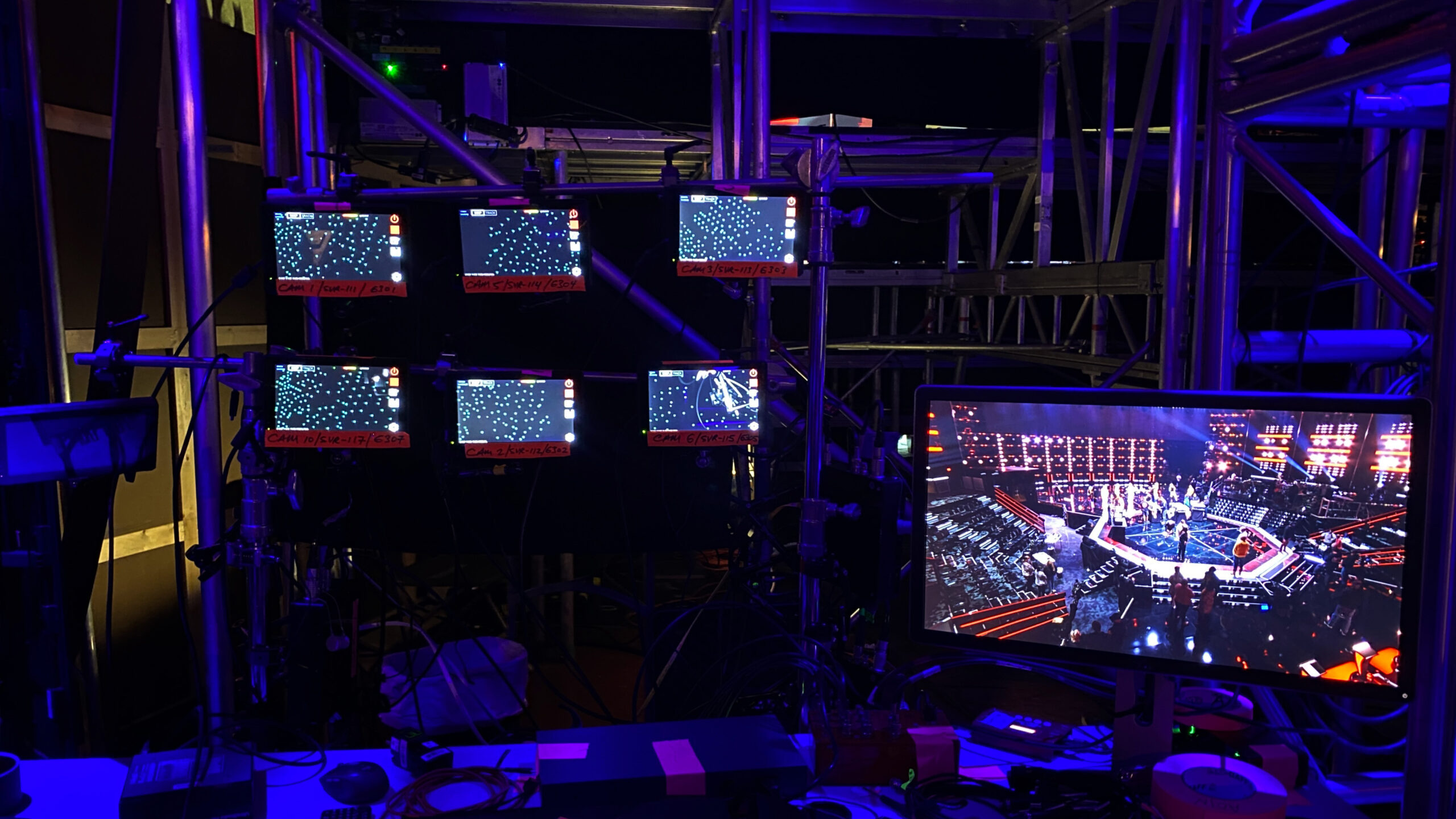

In this case, we needed to devise a method for synchronizing a massive amount of real-time data across multiple cameras in order to maintain the 3D hologram effect.

AOIN consulted with Microsoft to review the volumetric plugin’s source code, which allowed us to add the custom functionality needed to keep each AR performer synchronized to timecode. By dedicating a Silverdraft server to each of the seven cameras, we let directors choose shots using their traditional directing workflows, with the AR elements included in every shot.
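Keeping AR performers synchronized across cameras comes down to every camera server resolving the same house timecode to the same volumetric frame. The Python sketch below illustrates that mapping under simplifying assumptions: non-drop-frame timecode at an integer frame rate, one start timecode per clip, and a per-performer frame offset standing in for the on-site timing adjustments. The names `timecode_to_frames`, `VolumetricClip`, and `clip_frame_for` are hypothetical; this is not the Microsoft plugin’s API or AOIN’s actual modification.

```python
from dataclasses import dataclass
from typing import Optional


def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to an absolute frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if not 0 <= frames < fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


@dataclass
class VolumetricClip:
    """Hypothetical description of one AR performer's volumetric clip."""
    start_tc: str            # house timecode at which clip frame 0 should play
    clip_fps: int            # frame rate the volumetric sequence was captured at
    frame_count: int         # number of frames in the clip
    offset_frames: int = 0   # hypothetical per-performer timing nudge, in clip frames


def clip_frame_for(tc: str, tc_fps: int, clip: VolumetricClip) -> Optional[int]:
    """Return the clip frame to show at house timecode `tc`, or None outside the clip."""
    elapsed_tc = timecode_to_frames(tc, tc_fps) - timecode_to_frames(clip.start_tc, tc_fps)
    # Rescale timecode frames to clip frames with integer floor division (no float drift).
    frame = (elapsed_tc * clip.clip_fps) // tc_fps + clip.offset_frames
    if 0 <= frame < clip.frame_count:
        return frame
    return None
```

For example, with house timecode at 24 fps and a clip captured at 30 fps that starts at `01:00:00:00`, the timecode `01:00:02:12` (2.5 seconds in) maps to clip frame 75. Because the mapping is deterministic, each camera server that receives the same timecode lands on the same frame without exchanging playback state.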

What’s Next:

Our work with The Voice represents a lot of firsts, not least of which is the first time the show incorporated augmented reality into its presentation.

On the production side, the data-throttling issues that held back similar performances can now be overcome. For this project, Microsoft’s volumetric technology let us compress large amounts of data into a format that can be played back in real time while maintaining once-unattainable levels of detail. These advancements allow us to bring archival-quality volumetric assets into a live broadcast environment.

Services Rendered

Previsualization

Plugin Source Code Modification

Volumetric Video Integration

AR playback via Stypeland

System Engineering

Tracked Camera Data Recording

Post Production Renders

AOIN Team Credits

Executive Producer - Danny Firpo

Production Manager - Nicole Plaza

Technical Director - Berto Mora

Senior UE4 Technical Artist - Jeffrey Hepburn

Senior AR Engineer - Neil Carman

AR Engineer - Preston Altree

Associated Parties

Epic Games

Stype

Warner Music Group

Coldplay

BTS

The Voice

1651 S Central Ave, Suite F, Glendale, CA 91204

info@staging.allofitnow.com

(415) 525-4215
